2021 Looking at People Large Scale Signer Independent Isolated SLR CVPR Challenge

Challenge description

Overview

The Task: We are organizing a challenge on isolated sign language recognition from signer-independent non-controlled RGB-D data involving a large number of sign categories (>200).

The Dataset: A new dataset called AUTSL is used for this challenge. It consists of 226 sign labels and 36,302 isolated sign video samples performed by 43 different signers in total. The dataset has been divided into three sub-datasets for user-independent assessment of the models.
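To illustrate what a user-independent (signer-independent) division means, the sketch below partitions samples so that no signer appears in more than one subset. It is only an illustration: the function name, the sample and signer identifiers, and the choice of which signers go to which subset are all hypothetical, and the actual AUTSL split is the one distributed by the organizers.

```python
from collections import defaultdict


def signer_independent_split(samples, val_signers, test_signers):
    """Partition (sample_id, signer_id) pairs so that no signer spans two subsets.

    Hypothetical helper: signers listed in val_signers or test_signers go to
    those subsets, and every other signer goes to the training subset.
    """
    val_signers, test_signers = set(val_signers), set(test_signers)
    if val_signers & test_signers:
        raise ValueError("a signer cannot be in both validation and test")
    splits = defaultdict(list)
    for sample_id, signer_id in samples:
        if signer_id in test_signers:
            splits["test"].append(sample_id)
        elif signer_id in val_signers:
            splits["val"].append(sample_id)
        else:
            splits["train"].append(sample_id)
    return dict(splits)


# Toy data with made-up identifiers (not taken from AUTSL).
samples = [("s1", "A"), ("s2", "A"), ("s3", "B"), ("s4", "C"), ("s5", "C")]
splits = signer_independent_split(samples, val_signers=["B"], test_signers=["C"])
print(splits)
```

Because the split is made on signer identity rather than on individual videos, a model evaluated on the validation or test subset has never seen those signers during training.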

Dataset challenges include: variety of different backgrounds from indoor and outdoor environments, lighting variability, different postures of signers, dynamic backgrounds such as moving trees or moving people behind the signer, high intra and low inter-class variabilities, etc.

The Tracks/Phases: The challenge will be divided into two competition tracks, i.e., RGB and multimodal RGB-D SLR. Participants are free to join either or both tracks. Both modalities have been temporally and spatially aligned. Each track will be composed of two phases, i.e., a development phase and a test phase. In the development phase, public training data will be released and participants will need to submit their predictions on a validation set. In the test (final) phase, participants will need to submit their results on the test data, which will be released just a few days before the end of the challenge. Participants will be ranked, at the end of the challenge, using the test data. It is important to note that this competition involves the submission of results (and not code). Therefore, participants will be required to share their code and trained models after the end of the challenge (with detailed instructions) so that the organizers can reproduce the results submitted in the test phase, in a "code verification stage". At the end of the challenge, top ranked methods that pass the code verification stage will be considered valid submissions and can compete for any prize that may be offered.

Important Dates

Schedule already available.

Dataset

Detailed information about the dataset is provided here.

Baseline

In order to set a baseline for the evaluation of the AUTSL dataset, we experimented with several deep learning based models. We used Convolutional Neural Networks (CNNs) to extract features, and unidirectional and bidirectional Long Short-Term Memory (LSTM) models to characterize temporal information. We also incorporated feature pooling modules and temporal attention into our models to improve performance. You can find the details of the baseline model in the associated publication.
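As a rough illustration of the idea of temporal attention mentioned above, the following sketch pools a sequence of per-frame feature vectors into a single vector using softmax weights. It is a generic soft-attention pooling written in plain Python, not the baseline architecture: the function name and the scoring vector `score_weights` are hypothetical, and in a real model the frame features would come from a CNN and the scoring parameters would be learned.

```python
import math


def temporal_attention_pool(frame_features, score_weights):
    """Weighted average of frame features with softmax attention weights.

    frame_features: list of equal-length feature vectors, one per frame.
    score_weights:  hypothetical scoring vector (learned in a real model).
    """
    # One scalar relevance score per frame (dot product with the scoring vector).
    scores = [sum(w * x for w, x in zip(score_weights, f)) for f in frame_features]
    # Numerically stable softmax over time.
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # Attention-weighted sum of the frame features.
    dim = len(frame_features[0])
    pooled = [sum(a * f[d] for a, f in zip(alphas, frame_features)) for d in range(dim)]
    return pooled, alphas


# Two toy frames; a zero scoring vector gives uniform attention.
pooled, alphas = temporal_attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
print(pooled, alphas)
```

With a zero scoring vector every frame receives the same weight, so the result reduces to plain average pooling; non-zero scores shift the weight toward frames judged more relevant.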

How to enter the competition

The competition will be run on the CodaLab platform. Click on the following links to access our CVPR'2021 Looking at People Large Scale Signer Independent Isolated SLR Challenge.

The participants will need to register through the platform, where they will be able to access the data, submit their predictions on the validation and test data (i.e., development and test phases), and obtain real-time feedback on the leaderboard. The development and test phases will open/close automatically based on the defined schedule.

Starting kit

We provide a submission template (".csv" file) for each phase (development and test), listing the evaluated samples with associated random predictions. Participants are required to make submissions using the defined templates, replacing only the random predictions with the ones obtained by their models. The predictions (scores) are integer numbers indicating the predicted class. Note, the evaluation script will verify the consistency of submitted files and may invalidate the submission in case of any inconsistency.
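A minimal sketch of filling in such a template is shown below. It relies on several assumptions that are not stated on this page and should be checked against the downloaded template: that each row has the sample identifier in the first column and the predicted class in the second, that there is no header row, and that class labels are integers in the range 0 to 225. The function name and the `predictions` dictionary are hypothetical.

```python
import csv


def fill_template(template_path, output_path, predictions, num_classes=226):
    """Copy the template rows, replacing only the prediction column.

    Assumed layout (verify against the real template): one row per sample,
    sample id in column 0, integer class label in column 1, no header.
    predictions: dict mapping sample id -> integer label from your model.
    """
    with open(template_path, newline="") as f:
        rows = [row for row in csv.reader(f) if row]  # skip blank lines

    filled = []
    for row in rows:
        sample_id = row[0]
        if sample_id not in predictions:
            raise KeyError(f"no prediction for sample {sample_id!r}")
        label = predictions[sample_id]
        # Assumption: labels are zero-based integers in [0, num_classes).
        if not isinstance(label, int) or not 0 <= label < num_classes:
            raise ValueError(f"invalid label {label!r} for sample {sample_id!r}")
        filled.append([sample_id, label])

    # Keep the sample order of the template so the files stay consistent.
    with open(output_path, "w", newline="") as f:
        csv.writer(f).writerows(filled)
    return len(filled)
```

Keeping the row order and sample identifiers of the template unchanged is the simplest way to avoid the inconsistencies that the evaluation script checks for.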

Development phase: Submission template (".csv" file) can be downloaded here.

Test phase: Submission template (".csv" file) can be downloaded here.

Warning: the maximum number of submissions per participant at the test stage will be set to 3. Participants are not allowed to create multiple accounts to make additional submissions. The organizers may disqualify suspicious submissions that do not follow this rule.

Making a submission

To submit your predicted results (in each of the phases), you first have to compress your "predictions.csv" file (please keep the filename as it is) as "the_filename_you_want.zip". Then,

sign in on Codalab -> go to our challenge webpage on codalab -> go on the "Participate" tab -> "Submit / view results" -> "Submit" -> then select your "the_filename_you_want.zip" file and -> submit.

Warning: the last step ("submit") may take a few seconds (just wait). If everything goes fine, you will see the obtained results on the leaderboard ("Results" tab). Note, Codalab will keep the last valid submission on the leaderboard. This helps participants to receive real-time feedback on the submitted files. Participants are responsible for uploading, as their last valid submission, the file they believe will rank them highest.
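The compression step described above can be scripted, for example with Python's standard `zipfile` module. In this sketch the function name is hypothetical, and placing "predictions.csv" at the root of the archive (without parent folders) is an assumption about what the platform expects.

```python
import zipfile
from pathlib import Path


def make_submission_zip(predictions_csv, zip_path):
    """Compress predictions.csv into a zip archive with any chosen name."""
    predictions_csv = Path(predictions_csv)
    # The challenge asks that the CSV filename be kept as "predictions.csv".
    if predictions_csv.name != "predictions.csv":
        raise ValueError("the file to compress must be named predictions.csv")
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # arcname stores the file at the archive root (assumed requirement).
        zf.write(predictions_csv, arcname="predictions.csv")
    return zip_path
```

For example, `make_submission_zip("predictions.csv", "the_filename_you_want.zip")` produces the archive that is then uploaded through the "Submit" step.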

Evaluation Metric

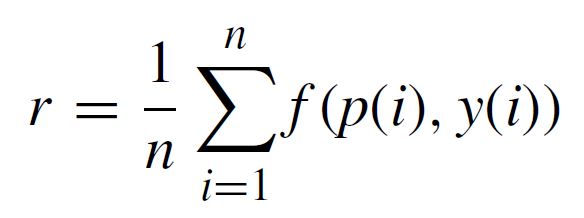

In order to evaluate the performances of the models, we use the recognition rate, r, as defined in:

where n is the total number of samples; p is the predicted label; y is the true label; if p(i) = y(i); f(p(i); y(i)) = 1, otherwise f(p(i); y(i)) = 0.
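In other words, the recognition rate is the fraction of samples whose predicted label equals the true label. A minimal sketch of this computation follows; the function name and the toy labels are illustrative only, and this is not the organizers' evaluation script.

```python
def recognition_rate(predicted, true):
    """Fraction of samples with predicted[i] == true[i]."""
    if len(predicted) != len(true):
        raise ValueError("predicted and true must have the same length")
    if not true:
        raise ValueError("at least one sample is required")
    # f(p(i), y(i)) is 1 when the labels match and 0 otherwise.
    correct = sum(1 for p, y in zip(predicted, true) if p == y)
    return correct / len(true)


# Toy example: three of the four predictions match the true labels.
print(recognition_rate([3, 7, 7, 0], [3, 7, 1, 0]))  # prints 0.75
```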

Basic Rules

According to the Terms and Conditions of the Challenge,

- "RGB track. Given the provided dataset, the participants will be asked to develop their SLR method using RGB information only."

- "RGB+D track. Given the provided dataset, the participants will be asked to develop their SLR method using RGB+Depth information only."

- "the maximum number of submissions per participant at the test stage will be set to 3. Participants are not allowed to create multiple accounts to make additional submissions. The organizers may disqualify suspicious submissions that do not follow this rule."

- "in order to be eligible for prizes, top ranked participants’ score must improve the baseline performance provided by the challenge organizers."

- "the performances on test data will be verified after the end of the challenge during a code verification stage. Only submissions that pass the code verification will be considered to be in the final list of winning methods;"

- "to be part of the final ranking the participants will be asked to fill out a survey (fact sheet) where a detailed and technical information about the developed approach is provided."

Winning solutions (post-challenge)

Important dates regarding code submission and fact sheets are provided here.

- Code verification: After the end of the test phase, top participants are required to share with the organizers the source code used to generate the submitted results, with detailed and complete instructions (and requirements) so that the results can be reproduced locally. Note, only solutions that pass the code verification stage are eligible for the prizes and to be announced in the final list of winning solutions. Note, participants are required to share both training and prediction code together with the pre-trained model, so that the organizers can run only the test stage if needed.

- Participants are requested to send by email to <juliojj@gmail.com> a link to a code repository with the required instructions. In this case, put in the Subject of the E-mail "CVPR 2021 SLR Challenge / Code repository and instructions"

- Fact sheets: In addition to the source code, participants are required to share with the organizers a detailed scientific and technical description of the proposed approach using the fact sheet template provided by the organizers. The LaTeX template of the fact sheets can be downloaded here.

- Then, send the compressed project (in .zip format), i.e., the generated PDF, .tex, .bib and any additional files to <juliojj@gmail.com>, and put in the Subject of the E-mail "CVPR 2021 SLR Challenge / Fact Sheets"

IMPORTANT NOTE: we encourage participants to make the instructions as detailed and complete as possible so that the organizers can easily reproduce the results. If we face any problem during code verification, we may need to contact the authors, which can take time and may delay the release of the list of winners.

Challenge Results (test phase)

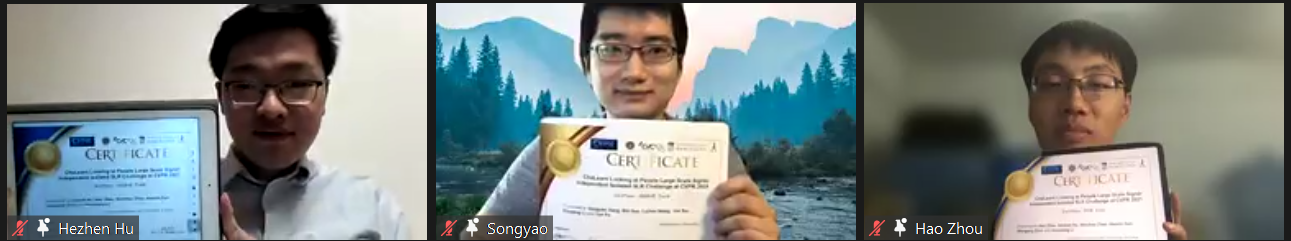

We are happy to announce the top-3 winning solutions of the CVPR 2021 Looking at People Large Scale Signer Independent Isolated SLR Challenge. These teams had their code verified at the code verification stage, and are the top winning solutions on both the RGB and RGBD tracks.

They are:

- 1st place: smilelab2021 (SMILE-Lab@NEU team)

- 2nd place: rhythmblue (USTC-SLR team)

- 3rd place: wenbinwuee

The final leaderboard (shown on Codalab), Codes and Fact Sheets shared by the participants can be found here: RGB track & RGB+D track. The organizers would like to thank all the participants for making this challenge a success.

Associated Workshop

Check our associated CVPR'2021 ChaLearn Looking at People Sign Language Recognition in the Wild Workshop.
