Hand pose recovery

Dataset description

Overview

The SyntheticHand dataset was generated for the problem of hand pose recovery from depth images, and it contains both single-frame and temporal data. We designed the dataset for multi-objective tasks, including semantic segmentation of the hand and pose recovery.

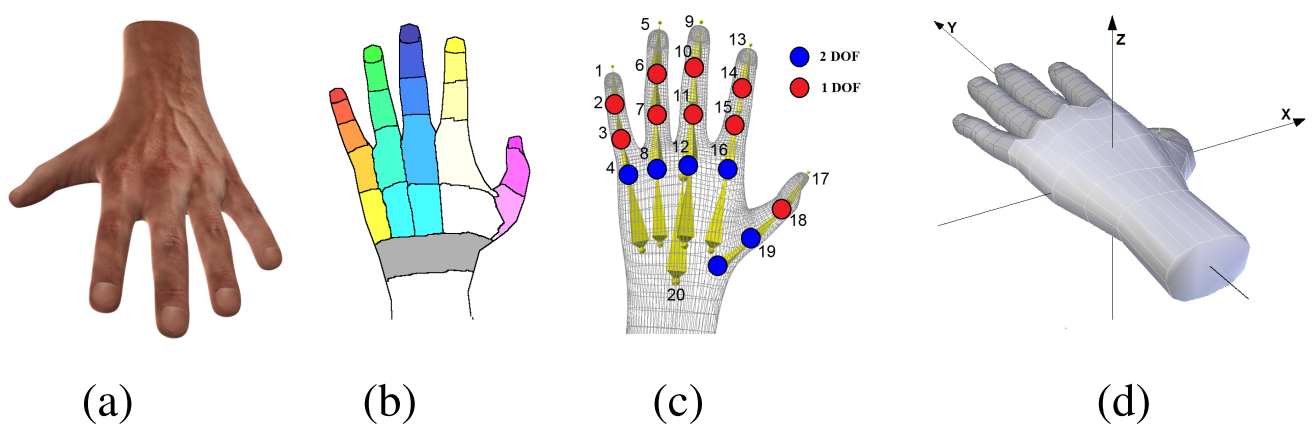

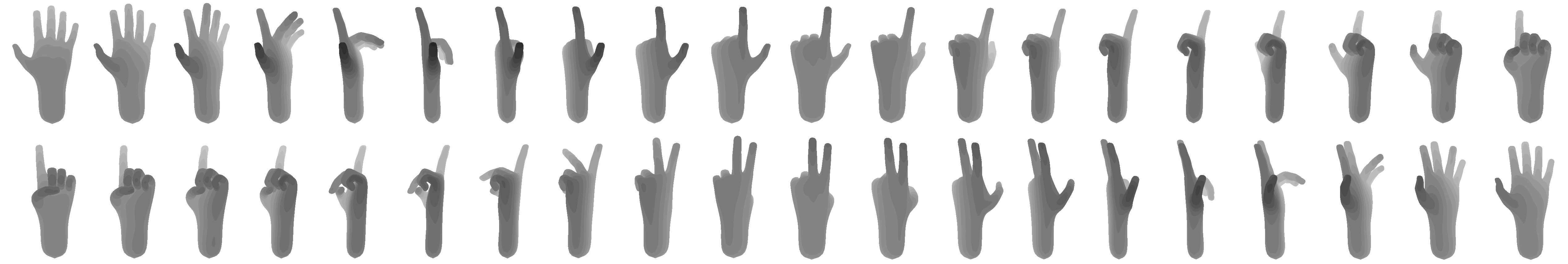

The dataset was generated with Blender 2.74 using a detailed, realistic 3D model of a human adult male hand. The model was rigged using a hand skeleton with four bones per finger, reproducing the distal, intermediate, and proximal phalanges, as well as the metacarpals. The thumb had no intermediate phalanx and was controlled with three bones. Additional bones were used to control palm and wrist rotation.

Infeasible hand poses were avoided by defining per-bone rotation constraints. All finger phalanges had only 1-DoF rotation (for finger flexion/extension), but metacarpals had 2-DoF rotation to allow for finger adduction/abduction. This resulted in 4-DoF per finger (except for the thumb), which proved to be enough to reproduce all reasonable poses in the context of gesture-based interaction.

The animated hand model was rendered using a virtual camera reproducing the image resolution and the intrinsic parameters of the target depth sensor (Kinect-2). The virtual camera was always aimed at the hand, from a view direction chosen randomly from a uniform discretization of the Gauss sphere (we used 320 directions associated with the normal vectors of a subdivided icosahedron). Sample poses are shown in the accompanying images.
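The view-direction sampling can be illustrated with a short sketch. An icosahedron has 20 faces, and splitting every triangle into four twice gives 20 × 4 × 4 = 320 faces, which matches the count quoted above. The code below is only one construction consistent with that number: the exact subdivision scheme, the projection of edge midpoints onto the unit sphere, and all function names (`icosahedron`, `subdivide`, `view_directions`) are assumptions, not the authors' implementation.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def icosahedron():
    """Return (vertices, faces) of an icosahedron inscribed in the unit sphere."""
    phi = (1.0 + math.sqrt(5.0)) / 2.0
    raw = [
        (-1, phi, 0), (1, phi, 0), (-1, -phi, 0), (1, -phi, 0),
        (0, -1, phi), (0, 1, phi), (0, -1, -phi), (0, 1, -phi),
        (phi, 0, -1), (phi, 0, 1), (-phi, 0, -1), (-phi, 0, 1),
    ]
    faces = [
        (0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
        (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
        (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
        (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1),
    ]
    return [normalize(v) for v in raw], faces


def subdivide(verts, faces):
    """Split every triangle into four; new vertices are pushed onto the sphere."""
    verts = list(verts)
    cache = {}  # shared edges reuse the same midpoint vertex

    def midpoint(i, j):
        key = (min(i, j), max(i, j))
        if key not in cache:
            m = tuple((a + b) / 2.0 for a, b in zip(verts[i], verts[j]))
            verts.append(normalize(m))
            cache[key] = len(verts) - 1
        return cache[key]

    new_faces = []
    for a, b, c in faces:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return verts, new_faces


def face_normal(p, q, r):
    """Unit normal of triangle (p, q, r), oriented away from the origin."""
    u = tuple(b - a for a, b in zip(p, q))
    v = tuple(b - a for a, b in zip(p, r))
    n = normalize((
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    ))
    centroid = tuple((a + b + c) / 3.0 for a, b, c in zip(p, q, r))
    if sum(a * b for a, b in zip(n, centroid)) < 0:
        n = tuple(-c for c in n)
    return n


def view_directions(levels=2):
    """Face normals of an icosahedron subdivided `levels` times (2 -> 320)."""
    verts, faces = icosahedron()
    for _ in range(levels):
        verts, faces = subdivide(verts, faces)
    return [face_normal(verts[a], verts[b], verts[c]) for a, b, c in faces]


directions = view_directions()
print(len(directions))
```

A random view can then be drawn with `random.choice(directions)`, with the camera placed along that direction and oriented toward the hand.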

Points on the hand's surface were assigned a unique color label identifying the underlying skeleton joint. The palm center was assumed to be roughly at the metacarpals' centroid.
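As a rough illustration of how these annotations might be consumed, the sketch below maps a color-coded segmentation image to per-pixel joint indices and computes a palm center as the centroid of the metacarpal positions. The palette, the background value, the use of one 3D point per metacarpal, and the function names are all hypothetical; the actual label colors and file layout of the dataset are not specified here.

```python
def decode_labels(seg_pixels, palette, background=-1):
    """Map each RGB pixel to a joint index; unknown colors become `background`.

    `seg_pixels` is a list of rows, each row a list of (r, g, b) tuples.
    `palette` is a hypothetical dict from (r, g, b) to joint index.
    """
    return [[palette.get(tuple(px), background) for px in row]
            for row in seg_pixels]


def palm_center(metacarpal_positions):
    """Centroid of the metacarpal positions, given as (x, y, z) tuples."""
    n = len(metacarpal_positions)
    return tuple(sum(p[k] for p in metacarpal_positions) / n for k in range(3))


# Toy example with a made-up two-color palette (not the dataset's real colors).
palette = {(255, 0, 0): 0, (0, 255, 0): 1}
segmentation = [
    [(255, 0, 0), (0, 0, 0)],
    [(0, 255, 0), (255, 0, 0)],
]
labels = decode_labels(segmentation, palette)

# Five made-up metacarpal positions, one per finger.
metacarpals = [(0, 0, 0), (2, 0, 0), (0, 2, 0), (2, 2, 0), (1, 1, 5)]
center = palm_center(metacarpals)
```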

The dataset contains two training sets: (1) over 700K random single-frame samples, each consisting of a depth image, a semantic segmentation image, and joint locations; and (2) over 1M temporal frames in a reference view, consisting of joint locations.

The test set contains over 8K temporal frames, each consisting of a depth image, a semantic segmentation image, and joint locations.

Citation

@article{madadiFG2017,
  author  = {Meysam Madadi and Sergio Escalera and Alex Carruesco and Carlos Andujar and Xavier Bar\'o and Jordi Gonz\`alez},
  title   = {Occlusion aware hand pose recovery from sequences of depth images},
  journal = {IEEE International Conference on Automatic Face and Gesture Recognition},
  year    = {2017}
}
