2022 Sign Spotting Challenge at ECCV

Challenge description

Overview

The Task: the challenge will use a partially annotated continuous sign language dataset of ~10 hours of video data in the health domain and will address the challenging problem of fine-grain sign spotting for continuous SLR. In this context, we want to put a spotlight on the strengths and limitations of the existing approaches, and define the future directions of the field.

challenge will use a partially annotated continuous sign language dataset of ~10 hours of video data in the health domain and will address the challenging problem of fine-grain sign spotting for continuous SLR. In this context, we want to put a spotlight on the strengths and limitations of the existing approaches, and define the future directions of the field.

The Dataset: we will use a new dataset of SL videos in the health domain (~10 hours of Continuous Spanish SL), signed by 10 deaf and interpreters with partial annotation with the exact location of 100 signs. This dataset has been collected under different conditions than the typical CSL datasets, which are mainly based on subtitled broadcast and real-time translation. In this case the signing is performed to explain health contents in sign language by reading printed cards, so reliance on signers is large due to the richer expressivity and naturalness.

The Tracks/Phases: The challenge will be divided into two different competition tracks, i.e., MSSL (multiple shot supervised learning) and OSLWL (one shot learning and weak labels). The participants are free to join any of these challenges. Each track will be composed of two phases, i.e., development and test phase. At the development phase, public train data will be released and participants will need to submit their predictions with respect to a validation set. At the test (final) phase, participants will need to submit their results with respect to the test data, which will be released just a few days before the end of the challenge. Participants will be ranked, at the end of the challenge, using the test data. It is important to note that this competition involves the submission of results (and not code). Therefore, participants will be required to share their codes and trained models after the end of the challenge (with detailed instructions) so that the organizers can reproduce the results submitted at the test phase, in a "code verification stage". At the end of the challenge, top ranked methods that pass the code verification stage will be considered as valid submissions to compete for any prize that may be offered.

Important Dates

Schedule already available.

Dataset

Detailed information about the dataset can be found here.

Baseline

TBA.

How to enter the competition

The competition will be run on CodaLab platform. Click in the following links to access our ECCV'2022 Sign Spotting Challenge.

- Track 1 - competition link - MSSL (multiple shot supervised learning): MSSL is a classical machine learning Track where signs to be spotted are the same in training, validation and test sets. The three sets will contain samples of signs cropped from the continuous stream of Spanish sign language, meaning that all of them have co-articulation influence. The training set contains the begin-end timestamps (in milisecs) annotated by a deaf person and a SL-interpreter with a homogeneous criterion (described in the dataset page) of multiple instances for each of the query signs. Participants will need to spot those signs in a set of validation videos with captured annotations. The annotations will be released when delivering the set of test videos. The annotations of the test set will be released after the challenge has finished. The signers in the test set can be the same or different to the training and validation set. Signers are men, women, right and left-handed.

- Track 2 - competition link - OSLWL (one shot learning and weak labels): OSLWL is a realistic variation of a one-shot learning problem adapted to the sign language specific problem, where it is relatively easy to obtain a couple of examples of a sign, using just a sign language dictionary, but it is much more difficult to find co-articulated versions of that specific sign. When subtitles are available, as in broadcast-based datasets, the typical approach consists of using the text to predict a likely interval where the sign might be performed. So in this track we simulate that case by providing a set of queries (isolated signs) and a set of video intervals around each and every co-articulated instance of the queries. Intervals with no instances of queries are also provided as negative groundtruth. Participants will need to spot the exact location of the sign instances in the provided video intervals. The annotations will be released when delivering the set of test queries and test video intervals. The annotations of the test set will be released after the challenge has finished.

The participants will need to register through the platform, where they will be able to access the data and submit their predicitions on the validation and test data (i.e., development and test phases) and to obtain real-time feedback on the leaderboard. The development and test phases will open/close automatically based on the defined schedule.

Starting kit

We provide a submission template (".pkl" file) for each phase (development and test), with evaluated samples and associated random predictions. Participants are required to make submissions using the defined templates, by changing the random predictions by the ones obtained by their models. That is, not only the random values but also the amount of tuples {class_id, begin_timestamp, end_timestamp} for each evaluation file. Note, the evaluation script will verify the consistency of submitted files and may invalidate the submission in case of any inconsistency.

- Track 1: submission template (".pkl" file) used on both (dev and test) phases can be downloaded here.

- Track 2: submission template (".pkl" file) used on both (dev and test) phases can be downloaded here.

Warning: the maximum number of submissions per participant at the test stage will be set to 3 (per track). Participants are not allowed to create multiple accounts to make additional submissions. The organizers may disqualify suspicious submissions that do not follow this rule.

Example from MSSL_Train_Set: download here a video file from the MSSL_Train_Set, the corresponding ELAN file, the groundtruth file in pickle format and in csv/txt format. Please read the file formats here (be aware that class numbers can vary with respect to the dataset distribution).

Making a submission

To submitt your predicted results (on each of the phases), you first have to compress your "predictions.pkl" file (please, keep the filename as it is) as "the_filename_you_want.zip". To avoid any incompatibility with different python versions, please save your pickle file using protocol = 4. Then,

sign in on Codalab -> go to our challenge webpage (and associated track) on codalab -> go on the "Participate" tab -> "Submit / view results" -> "Submit" -> then select your "the_filename_you_want.zip" file and -> submit.

Warning: the last step ("submit") may take few seconds (just wait). If everything goes fine, you will see the obtained results on the leaderboard ("Results" tab).

Note, Codalab will keep on the leaderboard the last valid submission. This helps participants to receive real-time feedback on the submitted files. Participants are responsible to upload the file they believe will rank them in a better position as a last and valid submission.

Evaluation Metric

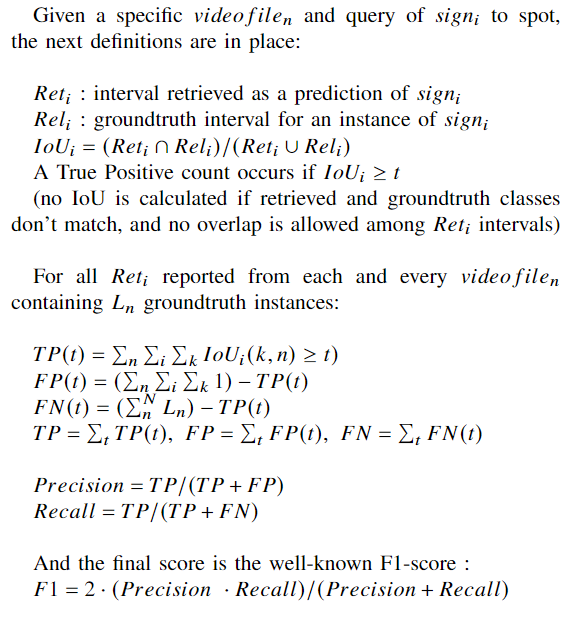

Matching score per sign instance is evaluated as the IoU of the groundtruth interval and the predicted interval. In order to allow relaxed locations the IoU threshold will be swept from 0.2 to 0.8 in 0.05 steps. True Positives and False Negatives are averaged from the 13 thresholds.

The evaluation script can be found here (new version from May 11th 2022 - bug fixed).

Basic Rules

According to the Terms and Conditions of the Challenge,

- "the maximum number of submissions per participant at the test stage will be set to 3. Participants are not allowed to create multiple accounts to make additional submissions. The organizers may disqualify suspicious submissions that do not follow this rule."

- "in order to be eligible for prizes, top ranked participants’ score must improve the baseline performance provided by the challenge organizers."

- "the performances on test data will be verified after the end of the challenge during a code verification stage. Only submissions that pass the code verification will be considered to be in the final list of winning methods;"

- "to be part of the final ranking the participants will be asked to fill out a survey (fact sheet) where a detailed and technical information about the developed approach is provided."

Note, at the test phase you may see on the leaderboard of Codalab some participants with "Entires" > N (N=3, in our case, "Max number of submissions"). This is because Codalab was not counting the failed submissions to increased the "Max number of submissions" counter.

Wining solutions (post-challenge)

Important dates regarding code submission and fact sheets are defined in the schedule.

- Code verification: After the end of the test phase, top participants are required to share with the organizers the source code used to generate the submitted results, with detailed and complete instructions (and requirements) so that the results can be reproduced locally (preferably using docker). Note, only solutions that pass the code verification stage are elegible for the prizes and to be anounced in the final list of winning solucions. Participants are required to share both training and prediction code with pre-trained model so that organizers can run it at only test stage if they need. Participants are requested to share with the organizers a link to a code repository with the required instructions. This information must be detailed inside the fact sheets (detailed next).

-

Ideally, the instructions to reproduce the code should contain:

1) how to structure the data (at train and test stage).

2) how to run any preprocessing script, if needed.

3) how to extract or load the input features, if needed.

4) how to run the docker used to run the code and to install any required libraries, if possible/needed.

5) how to run the script to perform the training.

6) how to run the script to perform the predictions, that will generate the output format of the challenge.

-

- Fact sheets: In addition to the source code, participants are required to share with the organizers a detailed scientific and technical description of the proposed approach using the template of the fact sheets providev by the organizers. Latex template of the fact sheets can be downloaded here.

Sharing the requested information with the organizers: Send the compressed project of your fact sheet (in .zip format), i.e., the generated PDF, .tex, .bib and any additional files to <juliojj@gmail.com>, and put in the Subject of the E-mail "ECCV 2022 Sign Spotting Challenge / Fact Sheets and Code repository"

IMPORTANT NOTE: we encourage participants to provide the instructions as more detailed and complete as possible so that the organizers can easily reproduce the results. If we face any problem during code verification, we may need to contact the authors, and this can take time and the release of the list of winners may be delayed.

Challenge Results (test phase)

We are happy to announce the top-3 winning solutions of the ECCV 2022 Sign Spotting Challenge. These teams had their codes verified at the code verification stage. Their fact sheets and link to code repository are available here (MSSL track) and here (OSLWL track). The organizers would like to thank all the participants for making this challenge a success.

- MSSL track:

- 1st place: ryanwong - Team Leader: Ryan Wong. Team members: Necati Cihan Camgoz and Richard Bowden (University of Surrey)

- 2nd place: th - Team Leader: Weichao Zhao. Team members: Hezhen Hu, Landong Liu, Kepeng Wu, Wengang Zhou, and Houqiang Li (University of Science and Technology of China)

- 3rd place: Random_guess - Team Leader: Xilin Chen. Team members: Yuecong Min, Peiqi Jiao, and Aiming Hao (Institute of Computing Technology, Chinese Academy of Sciences)

- OSLWL track:

- 1st place: th - Team Leader: Hezhen Hu. Team members: Landong Liu, Weichao Zhao, Hui Wu, Kepeng Wu, Wengang Zhou, and Houqiang Li (University of Science and Technology of China)

- 2nd place: Mikedddd - Team Leader: Xin Yu. Team members: Beibei Lin, Xingqun Qi, Chen Liu, Hongyu Fu, and Lincheng Li (University of Technology Sydney and Netease)

- 3rd place: ryanwong - Team Leader: Ryan Wong. Team members: Necati Cihan Camgoz and Richard Bowden (University of Surrey)

Associated Workshop

Check our associated ECCV'22 Open Challenges in Continuous Sign Language Recognition Workshop.

News

ECCV2022 Challenge on Sign Spotting

The ChaLearn ECCV2022 Challenge on Sign Spotting is open and accepting submissions on Codalab. Train data is available for download. Join us to push the boundaries of Continuous Sign Language Recognition.